Artificial intelligence has a love-hate relationship with crypto and blockchain technology: although the advent of ChatGPT has brought awareness of the full potential of AI in the world of trading, big data, and smart contracts, there are still some dangers that the crypto community needs to distance itself from.

In particular, Binance believes that some malicious users are able to use deepfakes to evade KYC verification systems and scam companies and investors.

Crypto alert: artificial intelligence and deepfakes

The cryptocurrency world has raised its first “red flag” over the much-loved artificial intelligence technology.

According to Jimmy Su, head of security at Binance, the next wave of scams for the industry will be spearheaded by deepfakes, which are spreading thanks to AI software.

Through machine learning, deepfake tools are able to create highly plausible (but fake) images or videos from real content found online.

In particular, these fake versions realistically recreate the features and movements of a face or body, and can even faithfully reproduce a particular voice.

In doing so, cyber-criminals are impersonating some of the prominent figures in the crypto community to carry out well-thought-out scams.

Although this technique can be applied honestly and advantageously in daily life and work, bringing benefits to those who use it, it can also be detrimental to both crypto companies and investors.

Last August, Binance's communications manager Patrick Hillmann warned that a group of hackers had created a deepfake version of him from his video interviews on the web and his TV appearances.

The “deepfake” version of Hillmann was then used in online meetings on Zoom with crypto project teams, who were promised that their tokens would be listed in exchange for payment.

The only way to combat the spread of fraud of this kind, according to Jimmy Su, is to educate users by pointing out the flaws that artificial intelligence still shows when recreating content.

Binance believes artificial intelligence can compromise the KYC verification systems of crypto projects

The crypto exchange Binance and its chief security officer believe that artificial intelligence and deepfakes can compromise KYC verification systems, putting users’ data and funds at risk.

The risk is not specific to Binance, which has sophisticated automated verification in place, but applies in general to all crypto projects that need to verify the identity of individuals through the classic “Know Your Customer” (KYC) procedure.

The modus operandi for evading these systems is the following: attackers first search online for photos of the victim, then use AI tools to produce highly realistic videos whose fake nature may go unnoticed.

Jimmy Su argues that deepfakes are spreading mainly for the purpose of carrying out scams, especially against communities of crypto enthusiasts.

At the same time, however, he believes that the overall quality of the fake videos is not yet good enough to fool human verification.

Binance plans to publish a series of blog posts to inform users about risk management methods.

In the first post published by the exchange, it was stated that the company uses artificial intelligence and machine learning algorithms to detect anomalous login patterns and transactions on the platform.
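The article does not say which models Binance uses, so the following is only a minimal sketch of the general idea of flagging an anomalous transaction. It assumes a hypothetical list of past transaction amounts for one account and applies a simple modified z-score based on the median absolute deviation; the function name, the sample data, and the 3.5 threshold are illustrative assumptions, not anything taken from the exchange.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return the indices of amounts that look anomalous.

    Uses the modified z-score: 0.6745 * |x - median| / MAD,
    where MAD is the median absolute deviation. The threshold
    of 3.5 is a common rule of thumb, assumed here for illustration.
    """
    med = median(amounts)
    abs_dev = [abs(a - med) for a in amounts]
    mad = median(abs_dev)
    if mad == 0:
        # All values are (nearly) identical: nothing stands out.
        return []
    return [i for i, d in enumerate(abs_dev) if 0.6745 * d / mad > threshold]


# Hypothetical transaction history for a single account.
history = [120.0, 95.5, 130.0, 110.0, 101.2, 98.0, 9500.0, 115.0]
suspicious = flag_anomalies(history)
print(suspicious)
```

A production system would combine many more signals (device, location, login times) and a trained model rather than a single statistic, but the principle is the same: behavior far from an account's usual pattern gets flagged for review.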

Hence, it is possible to use AI for non-fraudulent purposes, but the development of this technology needs to be watched carefully because it could cause significant damage in the crypto sector.

One Web3 security expert believes that deepfakes could be misused with Ledger's new “Recover” update, which lets users who lose their seed phrase ask the hardware wallet maker to send it to them after verifying their identity through KYC.

The best crypto assets that integrate AI technologies

More and more projects are emerging within the crypto market that integrate artificial intelligence technology into their business, in more or less concrete ways.

Sometimes the AI narrative and its appeal to the public are exploited to attract attention and generate trading volume on tokens that have no real fundamentals behind them, only well-managed communication and marketing.

Most of these include words such as “GPT,” “AI,” or “CHATBOT” in their names, sometimes mixing them with the brand name of some famous memecoin.

Nevertheless, the artificial intelligence trend is allowing serious projects such as SingularityNET, The Graph, Render Token, Fetch.ai, and Injective to increase their capitalization.

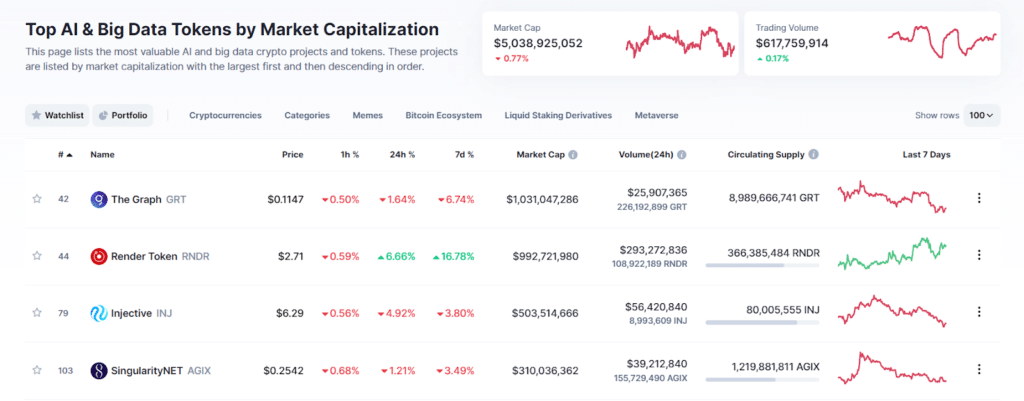

In this regard, the “AI and Big Data” category of cryptocurrencies on CoinMarketCap has surpassed the $5 billion mark, a significant increase from the beginning of 2023.

It is probably still too early to see a true explosion of the artificial intelligence trend, given that there are few truly applied use cases in the crypto world, excluding those where scams are attempted.

Chatbots, automated trading systems, and big data analysis are certainly important and will be among the drivers of the next bull markets.

However, we still have a long way to go and need to build more efficient products for a real revolution.

In the meantime, we can have fun creating a deepfake video with the face of CZ (CEO of Binance) announcing the delisting of your best friend’s favorite crypto, then sending him the fake content to see how he reacts.

However, be careful to keep the prank to private channels, without creating FUD within the community.

Source: https://en.cryptonomist.ch/2023/05/25/artificial-intelligence-dangerous-crypto/